
Introduction to the Google Search Console Coverage report and how to interpret its data

What Is The Google Search Console Coverage Report?

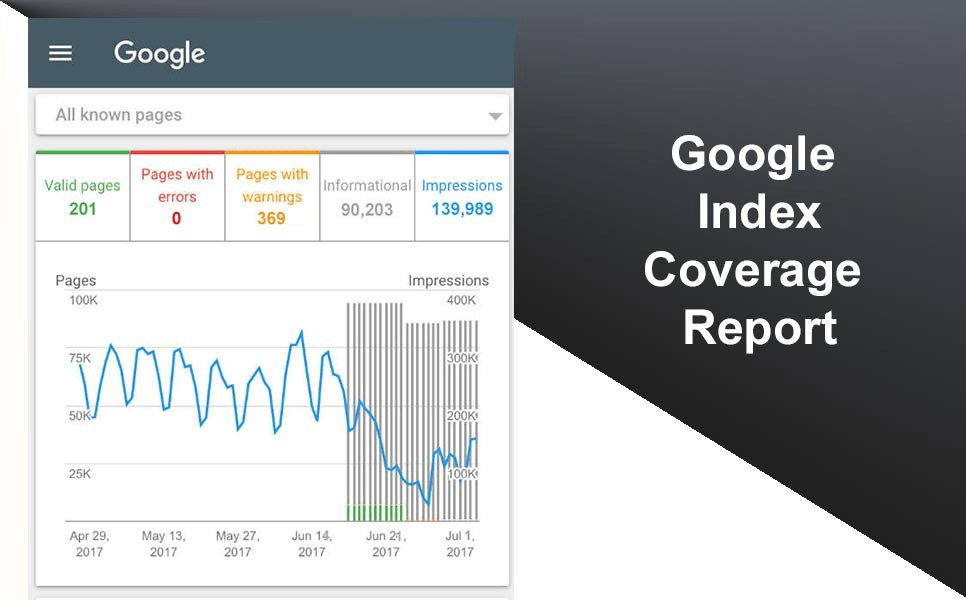

The Google Search Console Coverage report provides detailed information about which pages on your site are indexed. It also lists the problems Googlebot encountered while crawling and indexing them.

The main page of the Coverage report groups your site’s URLs by status:

- Error: The page is not indexed. This can happen for several reasons, such as the page returning a 404 status code.

- Valid with warnings: The page is indexed but has issues.

- Valid: The page is indexed.

- Excluded: The page is not indexed because Google followed rules set on your site, such as robots.txt disallow rules, noindex meta tags, or canonical tags. These rules can intentionally prevent pages from being indexed.

This Coverage report provides much more information than the old Search Console report. Google has clearly improved the data it shares, but some areas still need improvement.

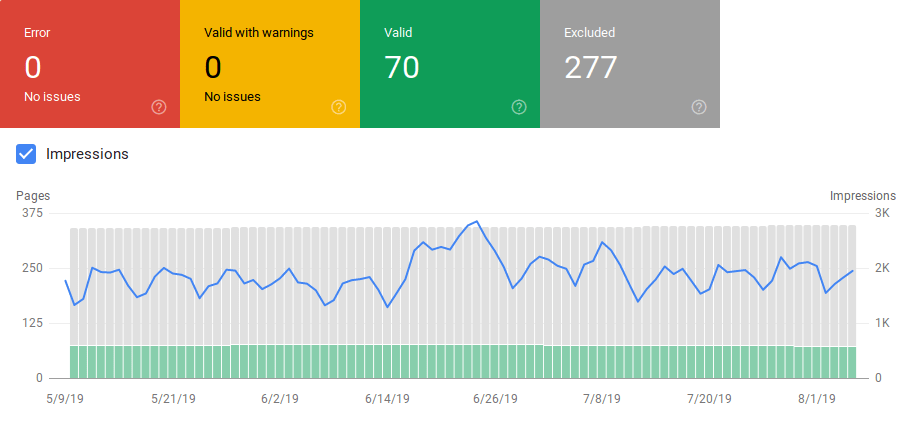

As the chart shows, Google displays a graph with the number of URLs in each category. If errors increase suddenly, you can analyze the data and correlate it with impressions. This helps you determine whether impressions are dropping because of error URLs or an increase in warnings.

After launching a site or creating new sections, you should see an increase in the number of valid indexed pages. It may take a few days for Google to index new pages, but you can use the URL inspection tool to request indexing and reduce the time Google takes to find your new page.

However, if you see the number of valid URLs declining or sudden spikes in errors, it is important to identify the affected URLs in the “Error” section and fix the issues listed in the report.

Google provides a good summary of actions to take when there are increases in errors or warnings.

Google also provides you with information about errors and the number of affected URLs:

Remember that Google Search Console does not always show 100% accurate information; there have been several reports of bugs and data anomalies. In addition, Search Console takes time to update, and some data can be 16 to 20 days old.

Google Search Console sometimes reports more than 1,000 error or warning URLs, as you can see in the image below, but you can only view or download a sample of up to 1,000 of them.

However, it is still a very good tool for identifying indexing issues on your site.

When you click on a specific error, you can see the details page which shows examples of affected URLs:

The image above shows the details page for all URLs returning a 404. Each report has a “Learn More” link to Google’s documentation describing the specific error, and Google also provides a graph showing the number of affected pages over time.

You can click each URL to inspect it, which works much like the “Fetch as Google” feature of the old Search Console. The inspection also tells you whether the page is blocked by your robots.txt.

After fixing the URLs, you can ask Google to validate the fix so that the error disappears from your report. Prioritize errors whose validation status is “Failed” or “Not started”.

It is important to mention that you should not expect to see all the URLs of your site indexed. Google has stated that the goal for webmasters should be to have all canonical URLs indexed. Duplicate or alternate pages will be categorized as excluded as their content is similar to that of the canonical page.

It is normal for sites to have pages in the “Excluded” category. Most sites have multiple URLs with noindex meta tags or URLs blocked by robots.txt. When Google identifies a duplicate or alternate page, make sure it has a canonical tag pointing to the correct URL, and check that the canonical version appears in the Valid category.

Google has included a drop-down filter at the top left of the report so you can show all known pages, all submitted pages, or only the URLs in a specific sitemap. The default view includes all known pages, meaning every URL Google has discovered. Submitted pages include all URLs you have reported through a sitemap. If you have submitted multiple sitemaps, you can filter by the URLs in each one.

Errors, Warnings, and Valid and Excluded URLs

Errors

- Server error (5xx): The server returned a 5xx error when Googlebot tried to crawl the page.

- Redirect error: Googlebot ran into a problem while following the URL’s redirects. This can be caused by a redirect chain that is too long, a redirect loop, a redirect URL that exceeds the maximum URL length, or a bad or empty URL in the redirect chain.

- Submitted URL blocked by robots.txt: The URLs in this list are blocked by your robots.txt file.

- Submitted URL marked ‘noindex’: URLs in this list have a ‘noindex’ directive in a meta robots tag or in an X-Robots-Tag HTTP header.

- Submitted URL seems to be a Soft 404: A soft 404 occurs when a page that does not exist (because it was removed or redirected) shows users a ‘page not found’ message but does not return an HTTP 404 status code. Soft 404s also happen when pages are redirected to irrelevant pages, for example a removed page that redirects to the home page instead of returning a 404 status code or redirecting to a relevant page.

- Submitted URL returns unauthorized request (401): The page submitted for indexing returns an HTTP 401 Unauthorized response.

- Submitted URL not found (404): The page returned a 404 Not Found error when Googlebot tried to crawl it.

- Submitted URL has crawl issue: Googlebot encountered a crawl error on these pages that does not fit any of the other categories. You need to check each URL to determine where the problem is.
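The redirect-error causes listed above (loops, chains that are too long, empty targets) can be checked offline before you ask Google to validate a fix. The sketch below assumes you have a dictionary mapping each redirecting URL to its target, for example exported from a crawler; the `follow_redirects` helper and the 10-hop limit are assumptions for illustration, not Google's exact rules.

```python
from typing import Dict, List, Tuple

# Assumed hop limit for illustration; check Google's current crawling docs.
MAX_HOPS = 10


def follow_redirects(
    start: str, redirects: Dict[str, str], max_hops: int = MAX_HOPS
) -> Tuple[str, List[str]]:
    """Follow a redirect map from `start` and classify the chain.

    `redirects` maps each redirecting URL to its target (hypothetical crawl export).
    Returns (status, chain), where status is "ok", "loop", "too_long", or "empty_url".
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        target = redirects[current]
        if not target:  # redirect with an empty target URL
            return "empty_url", chain
        chain.append(target)
        if target in seen:  # we have visited this URL before: redirect loop
            return "loop", chain
        seen.add(target)
        if len(chain) - 1 > max_hops:  # hops followed so far
            return "too_long", chain
        current = target
    return "ok", chain


if __name__ == "__main__":
    redirects = {"/old": "/newer", "/newer": "/newest", "/a": "/b", "/b": "/a"}
    print(follow_redirects("/old", redirects))  # ('ok', ['/old', '/newer', '/newest'])
    print(follow_redirects("/a", redirects))    # ('loop', ['/a', '/b', '/a'])
```

Any URL that does not come back as `"ok"` is a good candidate to fix by pointing the first redirect straight at the final destination.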

Warnings

Indexed, though blocked by robots.txt: The page was indexed because Google found external links pointing to it, even though your robots.txt blocks it from being crawled. Google flags these URLs as warnings because it is not sure whether you meant to block them. If you want to keep a page out of the index, use a ‘noindex’ meta tag or an X-Robots-Tag: noindex HTTP response header instead, and do not block the page in robots.txt; otherwise Googlebot cannot crawl the page to see the noindex directive.

If Google is correct and the URL was mistakenly blocked, you should update your robots.txt file to allow Google to crawl the page.

Valid URLs

Submitted and indexed: the URLs you submitted to Google through the sitemap.xml have been indexed.

Indexed, not submitted in sitemap: The URL was discovered by Google and indexed but was not included in your sitemap. It is recommended that you update your sitemap and include every page you want Google to crawl and index.
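To find the pages in this bucket, you can compare the URLs reported as indexed with the `<loc>` entries in your sitemap. A minimal sketch using Python's standard XML parser, assuming you have exported the indexed URLs from the report into a list; the function names are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, List, Set

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def sitemap_urls(xml_text: str) -> Set[str]:
    """Return every <loc> value in a sitemap (urlset) document."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.iter(f"{SITEMAP_NS}loc") if loc.text}


def indexed_not_in_sitemap(indexed: Iterable[str], xml_text: str) -> List[str]:
    """URLs Google reports as indexed that the sitemap does not list."""
    return sorted(set(indexed) - sitemap_urls(xml_text))


if __name__ == "__main__":
    sitemap = (
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://example.com/</loc></url>"
        "<url><loc>https://example.com/blog/</loc></url>"
        "</urlset>"
    )
    indexed = ["https://example.com/", "https://example.com/tag/seo/"]
    print(indexed_not_in_sitemap(indexed, sitemap))  # ['https://example.com/tag/seo/']
```

This handles a single `urlset` file; for a sitemap index you would first load each child sitemap and merge the results.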

Excluded URLs

Excluded by ‘noindex’ tag: When Google tried to index the page, it found a ‘noindex’ directive in a meta robots tag or an X-Robots-Tag HTTP header.
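Whether a page carries `noindex` in a meta robots tag or an `X-Robots-Tag` header can be checked from the page's HTML and response headers. A simplified sketch, assuming you have already fetched the page; it only reads `robots` and `googlebot` meta tags, treats `none` as implying `noindex`, and ignores less common header syntax.

```python
from html.parser import HTMLParser
from typing import List, Mapping

# "none" is shorthand for "noindex, nofollow".
NOINDEX_VALUES = {"noindex", "none"}


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":  # HTMLParser already lowercases tag and attribute names
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            content = attributes.get("content", "")
            self.directives.extend(d.strip().lower() for d in content.split(","))


def has_noindex(html: str, headers: Mapping[str, str]) -> bool:
    """True if the response headers or the HTML contain a noindex directive."""
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":
            # Values may be prefixed with a user agent, e.g. "googlebot: noindex".
            directives = [d.split(":")[-1].strip().lower() for d in value.split(",")]
            if any(d in NOINDEX_VALUES for d in directives):
                return True
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return any(d in NOINDEX_VALUES for d in parser.directives)
```

Running this over the URLs in this category lets you confirm that every page carrying the directive is one you actually want kept out of the index.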

Blocked by page removal tool: Someone asked Google not to show this page in search results by submitting a URL removal request in Google Search Console. If you want this page to be indexed, log in to Google Search Console and cancel the removal request.

Blocked by robots.txt: The robots.txt file has a line that prevents the URL from being crawled. You can check which line is responsible using the robots.txt tester.
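The robots.txt tester shows which line blocks a URL. To run a similar check in bulk, the sketch below follows Google's documented matching rules as I understand them: within the crawler's group, the longest matching path pattern wins, `Allow` wins a tie, `*` matches any sequence of characters, and `$` anchors the end of the URL. Group selection is simplified (exact user-agent match, else the `*` group), and all function names are hypothetical, so treat it as an approximation rather than a replacement for Google's tester.

```python
import re
from typing import Dict, List, Optional, Tuple

Rule = Tuple[int, str, str]  # (line number, "allow" or "disallow", path pattern)


def parse_rules(robots_txt: str, user_agent: str = "googlebot") -> List[Rule]:
    """Return the rules of the group for `user_agent`, falling back to the `*` group."""
    groups: Dict[str, List[Rule]] = {}
    agents: List[str] = []
    previous_was_agent = False
    for lineno, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if not previous_was_agent:  # a new group starts
                agents = []
            agents.append(value.lower())
            groups.setdefault(value.lower(), [])
            previous_was_agent = True
        elif field in ("allow", "disallow"):
            previous_was_agent = False
            for agent in agents:
                groups[agent].append((lineno, field, value))
    return groups.get(user_agent.lower(), groups.get("*", []))


def pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a robots.txt path pattern (* wildcard, optional $ anchor) to a regex."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = "".join(".*" if ch == "*" else re.escape(ch) for ch in body)
    return re.compile(regex + ("$" if anchored else ""))


def responsible_rule(rules: List[Rule], path: str) -> Optional[Rule]:
    """Return the rule that decides `path`, or None if no rule matches (crawling allowed)."""
    best: Optional[Rule] = None
    for rule in rules:
        _, kind, pattern = rule
        if not pattern:  # an empty Disallow blocks nothing
            continue
        if not pattern_to_regex(pattern).match(path):
            continue
        longer = best is None or len(pattern) > len(best[2])
        tie_allow = best is not None and len(pattern) == len(best[2]) and kind == "allow"
        if longer or tie_allow:
            best = rule
    return best


def is_blocked(rules: List[Rule], path: str) -> bool:
    rule = responsible_rule(rules, path)
    return rule is not None and rule[1] == "disallow"


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /private/\nAllow: /private/public-page.html\n"
    rules = parse_rules(robots)
    print(responsible_rule(rules, "/private/data.html"))  # (2, 'disallow', '/private/')
```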

Blocked due to unauthorized request (401): As in the “Errors” category, these pages return an HTTP 401 status code.

Crawl anomaly: this is a “catch-all” category that designates URLs responding with 4xx or 5xx codes. These response codes prevent the page from being indexed.

Crawled - currently not indexed: Google does not explain why the URL has not been indexed and suggests resubmitting it for indexing. However, it is important to check whether the page has thin or duplicate content, is canonicalized to another page, has a noindex directive, shows signs of poor user experience, or loads slowly. Any of these could be reasons why Google does not want to index your page.

Discovered - currently not indexed: Google has discovered the page but has not yet crawled or indexed it. You can submit the URL for indexing to speed up the process, as mentioned above. According to Google, this usually happens because crawling the URL was expected to overload the site, so Google rescheduled the crawl.

Alternate page with proper canonical tag: Google did not index this page because its canonical tag points to a different URL. Google followed the canonical tag and indexed the canonical URL instead. If you did not want this page indexed, there is nothing more to do here.

Duplicate without user-selected canonical: Google found duplicates of the pages listed in this category, and none of them declares a canonical tag, so Google chose a different URL as the canonical. Check these pages and add a canonical tag pointing to the correct URL.

Duplicate, Google chose different canonical than user: Google detected the URLs in this category without an explicit crawl request, for example through external links, and determined that another page makes a better canonical. For this reason, Google did not index these pages. Google recommends marking these URLs as duplicates of the canonical page.

Not found (404): When Google tries to access these pages, they return a 404 error. These URLs were not submitted; Google discovered them through links, such as external links pointing to them. It is a good idea to redirect these URLs to similar pages, both to preserve link equity and to send users to a relevant page.
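Picking redirect targets for many 404 URLs is tedious. One rough heuristic (my assumption, not something Google prescribes) is to suggest the live URL whose path is most similar to the missing one, using Python's `difflib`, and then review every suggestion by hand before adding redirects. The `suggest_redirects` name and the 0.6 cutoff are illustrative choices.

```python
import difflib
from typing import Dict, List, Optional


def suggest_redirects(
    not_found: List[str], live_urls: List[str], cutoff: float = 0.6
) -> Dict[str, Optional[str]]:
    """Map each 404 URL to its most similar live URL, or None if nothing scores above `cutoff`."""
    suggestions: Dict[str, Optional[str]] = {}
    for url in not_found:
        # get_close_matches ranks candidates by SequenceMatcher similarity (0 to 1).
        matches = difflib.get_close_matches(url, live_urls, n=1, cutoff=cutoff)
        suggestions[url] = matches[0] if matches else None
    return suggestions


if __name__ == "__main__":
    live = ["/blog/seo-tips/", "/services/technical-seo/", "/contact/"]
    print(suggest_redirects(["/blog/seo-tip/", "/zzzz-qqqq"], live))
```

String similarity says nothing about whether the content is actually relevant, so URLs mapped to `None` (or to an odd match) still need a human decision.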

Page removed because of legal complaint: Someone filed a legal complaint about your page, for example for copyright infringement. You can appeal a complaint here.

Page with redirect: These URLs redirect to another URL and are therefore excluded.

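Soft 404: As mentioned above, these URLs look like error pages (for example, they show a ‘Not Found’ message) but do not return a 404 status code, so they are excluded. Check the pages and make sure that any page that no longer exists returns an HTTP 404 status code along with its ‘Not Found’ message.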
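You can look for likely soft 404s in your own crawl data before Google reports them. The heuristic below is an assumption, not Google's classifier: it flags URLs whose final response is 200 but whose body contains a "not found" phrase, or that were redirected to the home page. The phrase list is hypothetical; replace it with the wording your templates actually use.

```python
# Hypothetical phrases; replace with the text your "not found" template actually shows.
NOT_FOUND_PHRASES = ("page not found", "no longer available", "nothing was found")


def looks_like_soft_404(
    requested_url: str, final_url: str, status: int, body: str, home_url: str
) -> bool:
    """Heuristically flag URLs that behave like missing pages but do not return 404.

    `status` is the final status code after following any redirects.
    """
    if status != 200:
        return False  # a real 404 or 410 is not a soft 404
    text = body.lower()
    if any(phrase in text for phrase in NOT_FOUND_PHRASES):
        return True
    # A removed URL redirected to the home page is another common soft 404 pattern.
    home = home_url.rstrip("/")
    return requested_url.rstrip("/") != home and final_url.rstrip("/") == home
```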

Duplicate, submitted URL not selected as canonical: This is similar to the duplicate case above where Google chose a different canonical than the user, except that you submitted these URLs yourself. Check your sitemap and make sure it does not include duplicate pages.
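To check that your sitemap lists only canonical URLs, you can compare each sitemap URL with the `rel="canonical"` value your crawler found on that page. A minimal sketch, assuming a hypothetical mapping from URL to its canonical `href` exported from a crawl; trailing slashes are ignored when comparing, and pages without a canonical tag are skipped.

```python
from typing import Dict, Iterable, List


def non_canonical_in_sitemap(
    sitemap_urls: Iterable[str], canonicals: Dict[str, str]
) -> List[str]:
    """Return sitemap URLs whose canonical tag points to a different URL."""
    flagged = []
    for url in sitemap_urls:
        canonical = canonicals.get(url)
        if canonical and canonical.rstrip("/") != url.rstrip("/"):
            flagged.append(url)
    return flagged


if __name__ == "__main__":
    sitemap = ["https://example.com/a", "https://example.com/a?ref=x", "https://example.com/b"]
    canonicals = {
        "https://example.com/a": "https://example.com/a",
        "https://example.com/a?ref=x": "https://example.com/a",
    }
    print(non_canonical_in_sitemap(sitemap, canonicals))  # ['https://example.com/a?ref=x']
```

Flagged URLs are usually best removed from the sitemap and replaced by the canonical they point to.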

How can you use Search Console data to improve your site?

Looks like Google Search Console > URL Inspection > Live Test incorrectly reports all JS and CSS files as Crawl allowed: No: blocked by robots.txt. Test about 20 files across 3 domains. pic.twitter.com/fM3WAcvK8q

— JR Oakes (@jroakes) July 16, 2019

Working at an agency, I have access to many different sites and their Coverage reports, and I have spent time analyzing the errors Google reports in various categories.

The report helps you find errors related to canonical tags and duplicate content. However, you may occasionally run into anomalies, such as the one reported by @jroakes in the tweet above. Shortly after the new Google Search Console was released, AJ Kohn also wrote a great article. He explains that the real value of the data lies in using it to build an overview of each type of content on your site:

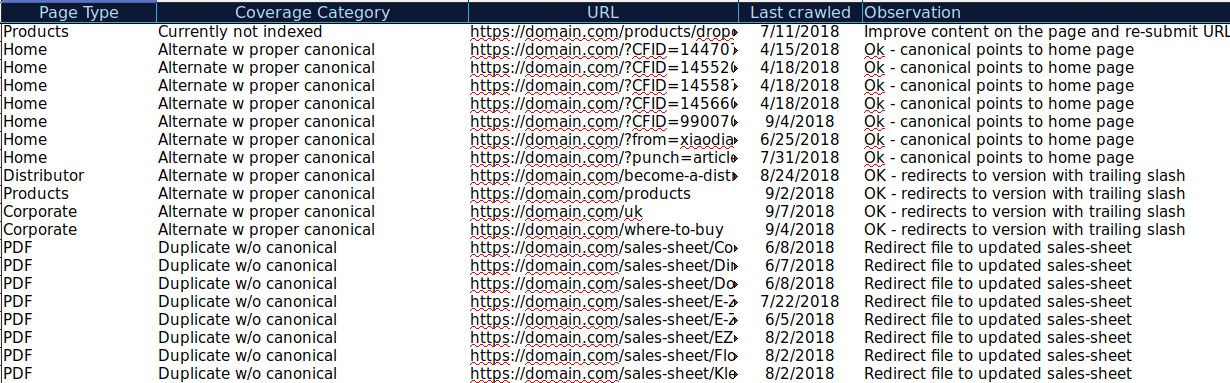

As the image above shows, the coverage data is broken down by page template, such as blog posts and service pages. This is possible because Google lets you filter coverage information by sitemap, so if you submit a separate sitemap for each template, you can see the coverage of each one. The table then adds three columns: the percentage of pages submitted and indexed, the rate of valid URLs, and the percentage of pages crawled.

This table provides a very good overview of the health of your site. If you want to dig into these sections, I recommend that you check out the report and double-check the errors Google is reporting.

You can download the URLs in each category, use OnCrawl to check their HTTP status codes, canonical tags, and so on, and create a spreadsheet like this:

Organizing your data this way helps you track issues and implement fixes for the URLs that need them. You can also see which URLs are fine and need no changes.

You can also add data from other sources to this spreadsheet, such as Ahrefs, Majestic, and OnCrawl’s Google Analytics integration. This lets you bring backlink, traffic, and conversion data for each URL into the same sheet as your Google Search Console data.

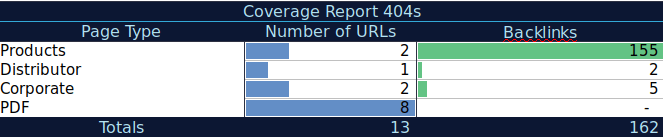

All this data can help you make better decisions for each page. For example, if you have a list of 404 pages, you can cross-reference it with your backlink data to make sure you are not losing link equity from domains that link to broken pages on your site. Or you can compare your indexed pages with the organic traffic they receive: indexed pages that get no organic traffic are candidates for optimization (improving content and usability) to drive more traffic to them.
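The 404 cross-check described above is a simple join between two exports. A minimal sketch, assuming you have a list of 404 URLs from the Coverage report and a hypothetical mapping from URL to the number of referring domains taken from your backlink tool (the exact export format of any given tool is not assumed here):

```python
from typing import Dict, Iterable, List, Tuple


def broken_pages_with_links(
    not_found: Iterable[str], referring_domains: Dict[str, int]
) -> List[Tuple[str, int]]:
    """Return 404 URLs that still have referring domains, most-linked first."""
    hits = [
        (url, referring_domains[url])
        for url in set(not_found)
        if referring_domains.get(url, 0) > 0
    ]
    # Sort by referring domains (descending), then URL for a stable order.
    return sorted(hits, key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    missing = ["/old-guide", "/typo", "/old-post"]
    backlinks = {"/old-guide": 12, "/old-post": 3, "/home": 50}
    print(broken_pages_with_links(missing, backlinks))  # [('/old-guide', 12), ('/old-post', 3)]
```

The URLs at the top of this list are the ones where a redirect to a relevant page recovers the most link equity.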

With this additional data, you can create a summary on another worksheet. Use =COUNTIF(range, criterion) to count the URLs for each page type (this table can complement the one AJ Kohn suggested above). You can also sum the backlinks, visits, or conversions for each URL and show them in the summary table with =SUMIF(range, criterion, [sum_range]). You will get something like this:
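If you prefer a script to spreadsheet formulas, the same COUNTIF/SUMIF summary takes only a few lines. A minimal sketch, assuming each row is a dictionary with a page-type column and a numeric column such as backlinks; the column names `page_type` and `backlinks` are hypothetical.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, Tuple


def summarize(
    rows: Iterable[Dict[str, Any]], group_by: str, sum_field: str
) -> Dict[str, Tuple[int, float]]:
    """COUNTIF + SUMIF per category: {category: (url_count, total of sum_field)}."""
    counts: Dict[str, int] = defaultdict(int)
    totals: Dict[str, float] = defaultdict(float)
    for row in rows:
        key = str(row[group_by])
        counts[key] += 1  # like COUNTIF(page_type_column, key)
        totals[key] += float(row.get(sum_field, 0) or 0)  # like SUMIF(..., key, sum_column)
    return {key: (counts[key], totals[key]) for key in counts}


if __name__ == "__main__":
    rows = [
        {"url": "/blog/a", "page_type": "blog", "backlinks": 4},
        {"url": "/blog/b", "page_type": "blog", "backlinks": 0},
        {"url": "/services/x", "page_type": "service", "backlinks": 10},
    ]
    print(summarize(rows, "page_type", "backlinks"))  # {'blog': (2, 4.0), 'service': (1, 10.0)}
```

Grouping by the coverage status instead of the page type gives the per-category counts from the report itself.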

I really like working with summary tables that provide an overview of the data and can help me identify the sections I need to focus on.

The Bottom Line

When fixing issues in the Coverage report and analyzing the data, ask yourself: Is my site optimized for crawling? Are my indexed and valid pages increasing or decreasing? Are error pages rising or falling? Am I letting Google spend its time on URLs that bring value to my users, or is it crawling a lot of junk pages?

With these answers, you can start improving your site right away so that Googlebot spends more of its crawl budget on pages that provide value to users instead of on unnecessary pages. You can use robots.txt to improve crawl efficiency, remove unnecessary URLs where possible, or use canonical or noindex tags to avoid duplicate content.

Google regularly adds new features and data to the various Google Search Console reports. Hopefully, we will continue to see more data in each category of the Coverage report, as well as in other Google Search Console reports.
